From soon-to-be-released books

Open and Distance Education in Australia, Europe and the Americas: National Perspectives in a Digital Age

Open and Distance Education in Asia, Africa and the Middle East: National Perspectives in a Digital Age

Book Editors

Adnan Qayyum & Olaf Zawacki-Richter

Countries and authors

- Australia – Colin Latchem

- Brazil – Frederic Litto

- Canada – Tony Bates

- China – Wei Li & Na Chen

- Germany – Ulrich Bernath & Joachim Stöter

- India – Santosh Panda & Suresh Garg

- Russia – Olaf Zawacki-Richter, Sergey Kulikov, Diana Püplichhuysen & Daria Khanolainen

- South Africa – Paul Prinsloo

- South Korea – Cheolil Lim, Jihyun Lee & Hyosun Choi

- Turkey – Yasar Kondaci, Svenja Bedenlier & Cengiz Hakan Aydin

- United Kingdom – Anne Gaskell

- United States – Michael Beaudoin

October 2017

BACKGROUND

In 2016 and 2017, online and distance education scholars from 12 countries wrote about current and future developments in their respective countries for two books published by Springer Publishing as part of its “Series on Distance Education”.

The main trends and developments described in this snapshot are based on these soon-to-be-released books: Open and Distance Education in Australia, Europe and the Americas: National Perspectives in a Digital Age, and Open and Distance Education in Asia, Africa and the Middle East: National Perspectives in a Digital Age. Both books focus mainly on distance education at the higher education level and are edited by Adnan Qayyum of Pennsylvania State University in the United States and Olaf Zawacki-Richter of the University of Oldenburg in Germany.

All of these countries, with the exception of Brazil, have a strong tradition of distance education. The countries chosen represent 51% of the world’s population. As such, these two books provide a picture of major online and distance education trends and opportunities in the world today.

This snapshot puts a spotlight on major developments, challenges and opportunities that are occurring in online and distance education in the countries analyzed.

Major developments

- Online and distance education enrolments are strong and mainly growing

- Existing institutions are increasing their online and distance education offerings

- New institutions are offering online and distance education

- Distance education (DE) is an integral part of higher education

- DE is accepted as mainstream in developed countries

Major challenges

- DE is not yet accepted as mainstream in many developing countries

- Growing competition among online and distance education providers

- The growing gap between online education and correspondence education

- Legacy infrastructure and sunk costs of distance education providers

- Government policies can still be barriers for distance education provision and innovation

Innovations and Opportunities

- Credit transfer from MOOCs in Russia

- Competency-based education in the United States

- Open textbooks in Canada

- Funding students with grants and scholarships to improve completion rates

- Proactive, not reactive, quality assurance of distance education programs

- Online educators increasingly taking a business approach

- Private companies buying public higher education institutions

- Public distance education institutions partnering with private businesses

MAJOR DEVELOPMENTS IN ONLINE AND DISTANCE EDUCATION

Enrolments

Growth: Enrolments have been growing consistently in countries like Australia, Brazil, Canada, China, Germany, India, Turkey and the United States. In Australia, Canada, Germany and the United States, enrolments have been growing steadily for most of this decade.

This is due to demand for greater flexibility among conventional higher education students and the growing number of adult learners in higher education. In Brazil, China, India and Turkey, online and distance education enrolments have been growing rapidly. In these countries, demand for education is increasing at all levels (primary, secondary and tertiary), especially as the need for a qualified labour force and an informed citizenry grows. This is notable because these countries have very different government policies on the provision and acceptance of online and distance education programs, yet all of them are seeing enrolment growth. The overall trend is a steady increase in distance education enrolments in developed countries and a strong increase in developing countries.

Fluctuation: Enrolment numbers have fluctuated in South Africa and South Korea. In South Korea, the Korean National Open University has seen a steady decrease in enrolments for most of the past decade, and enrolments at South Korea’s cyber universities (fully online universities) have plateaued for the past six years. In South Africa, enrolments at the biggest DE provider, the University of South Africa, have been increasing for most of this decade, with an atypical decrease in 2014 before growth resumed in 2015.

Decline: Only in Russia and the United Kingdom have enrolment numbers been decreasing for several years in a row. In Russia, the general population is declining and demand for education as a whole has been contracting. There has also been an important split in Russia: demand for online education is increasing while demand for correspondence education is decreasing. For these two modes combined, enrolments in distance education courses and programs have declined. In the United Kingdom, government policy changes have led to a decrease in distance education enrolments. In particular, reduced funding for higher education has resulted in significantly higher fees for students since 2012.

Fewer adult and part-time students are enrolling in DE, and these groups have historically constituted an important part of DE enrolments.

Existing institutions are increasing their online and distance education offerings

Open and distance education courses and programs are available from a growing number of existing higher education institutions. There are two major types of existing providers:

- Existing distance education institutions: Historical providers of DE continue to be important. Institutions that have historically offered distance education have been expanding the number of courses and programs they offer. In Australia, for example, nearly three quarters of all online enrolments are at six universities: Charles Sturt University, the University of Southern Queensland, the University of New England, Deakin University in Melbourne, Central Queensland University and the University of Tasmania. In India, open universities such as the Indira Gandhi National Open University, B.R. Ambedkar Open University, the Karnataka State Open University and the Netaji Subhas Open University continue to be large providers of distance education. In Germany, South Africa and Turkey, long-established institutions such as FernUniversität, UNISA and Anadolu University, respectively, continue to be the largest providers of distance education.

- Growth of dual-mode (on-campus and online) institutions: A large number of campus-based institutions are offering distance education, and particularly online education. For decades, most higher education institutions in Russia have had distance education units alongside their “direct departments”, historically offering correspondence courses. These universities are some of the main providers of online education. Over the past two decades in countries like Canada and the United States, on-campus institutions have become the largest providers of distance education in their countries by offering online education. But the growth of dual-mode institutions is happening across the world. For example, the University of Cape Town regularly ranks first among universities in South Africa and indeed on the entire African continent. In 2014, it approved the creation of six online distance education programs, with 14 more being planned. In the United Kingdom, so many universities now offer online and distance education that government data collection has not yet caught up with tracking online and distance education enrolments at these institutions. In China, high-profile campus-based institutions such as Peking University, Nanjing University, Sun Yat-Sen University, Beijing Normal University and the Harbin Institute of Technology all offer online education programs.

New institutions offering online and distance education

There are two major types of new institutions offering online and distance education: institutions created by universities and institutions created by companies.

Spin-offs: The emergence of online spin-off institutions from existing higher education providers is probably best known in the MOOC world, with the Stanford University spin-offs Coursera and Udacity, and edX, an MIT and Harvard initiative. However, the idea is neither recent nor unique to MOOCs; the practice has been occurring for some time. In South Korea, university spin-offs have had considerable impact on national distance education provision. For example, the Kyung Hee Cyber University is independent but based on Kyung Hee University in Seoul, which dates back to 1949. The Daegu Cyber University was established in 2002 and has close ties with Daegu University, which has operated in Gyeongsang province for over 60 years. Most of the 17 cyber universities, such as the Cyber University of Korea and the Seoul Cyber University, are affiliated with high-profile on-campus institutions. These cyber universities were all established after 2000 and are accredited by the South Korean Ministry of Education, Science and Technology.

Private companies are the other major type of new institution providing distance education. Online and distance education is increasingly provided by private universities, that is, universities that do not receive public funding from the government. These include both private non-profit and private for-profit providers, and private for-profit companies are providing a growing share of distance education. While the University of Phoenix is well known to many western audiences, it is certainly not the largest private provider of online and distance education courses. Unopar, based in Londrina, Brazil, has more enrolments and more local learning centers throughout Brazil than the University of Phoenix has in the United States. Four groups, Unopar, Anhanguera, Estácio and UNIP (Universidade Paulista), have over half of all distance education enrolments in Brazil: private-sector enrolments make up nearly 90% of all DE enrolments, and these four organizations account for nearly 60% of private-sector DE enrolments. Even in Russia, more students are enrolled in correspondence courses at private institutions than at state universities.

Distance education is an integral part of higher education

In most of the countries covered in the two books, distance education is an important part of higher education enrolments and provision. On the enrolment side, in Australia, Brazil, Canada, China, India, Russia, South Africa, Turkey and the United States, nearly one fifth or more of all higher education students are taking some online or distance education courses or programs. In the United States, the only growth in higher education enrolments comes from growth in distance education enrolments. On the provision side, distance education is not offered only by open-access or low-selectivity institutions. In Australia, Brazil, Canada, China, Russia, South Korea, the United Kingdom and the United States, high-profile institutions are offering distance education. They provide it not only for adult learners but also for younger, conventional higher education students who want flexibility. From both a student and an institutional perspective, distance education is increasingly seen as an integral part of higher education.

DE is accepted as mainstream in developed countries

As DE has become an important part of higher education, it has gained mainstream acceptance. With the advent of online education in particular, distance education programs have gained more legitimacy in the eyes of larger educational institutions, governments and employers. As more high-profile institutions offer online education in many countries, recognition of distance education, and its acceptance by employers, has increased. Governments are also increasingly recognizing the importance of distance education. Although there is an immense amount of academic research on online and distance education, basic enrolment and provision data are still difficult to track. In the United States, it used to be difficult to answer the question “how many students are enrolled in online courses?” until academics undertook research to answer it methodically. The question is now considered important enough that the United States National Center for Education Statistics collects this information through its Integrated Postsecondary Education Data System (IPEDS). This U.S. academic initiative on online enrolments is now being replicated in Canada. In Australia and the United Kingdom, there is increasing awareness that not tracking online enrolments and providers leaves a gap in the data. In most developed countries, distance education, in the form of online education, is mainstream.

MAJOR CHALLENGES IN ONLINE AND DISTANCE EDUCATION

DE is not yet mainstream in many developing countries

In India and Turkey, it is still a struggle to get distance education programs and degrees recognized as being of equal value to on-campus programs and degrees. In India, this is expressed as concern about “parity of esteem”: the esteem accorded to distance education is not on par with that of residential degrees. This view comes from government bodies such as the University Grants Commission, the Indian higher education regulator, which deems that DE providers lack quality programs in both correspondence and online education. Since 2009, this regulator has banned M.Phil. and Ph.D. programs via distance learning. As a result, distance education providers in India have not been able to offer or grow graduate programs, which have proved very successful for distance education providers in other countries. In Turkey, open and distance education degrees technically have equal status with residential degrees. But this equality exists mainly on paper, as most employers, especially in white-collar professions, prioritize conventional residential programs. In these countries, DE programs still struggle with an image of offering low-prestige degrees.

Growing competition among online and distance education providers

As enrolments in online and distance education have grown in most countries, so too has the number of providers. The pie is getting larger, but there is more competition for it. In every one of the twelve countries analyzed, there are more distance education providers now than there were 10 years ago, and there is no indication that the number of institutions offering distance education will decrease anytime soon. However, it is not just the number of providers that is notable; the nature of the competition also matters. Highly selective institutions such as the University of Cape Town in South Africa, Lomonosov Moscow State University in Russia, Peking University in China, and Ivy League institutions in the United States like Columbia University and Harvard University’s Extension School are now offering online education. Given the profile of these institutions, existing distance education providers now also have to consider “brand” as part of the competitive landscape.

The growing gap between online education and correspondence education

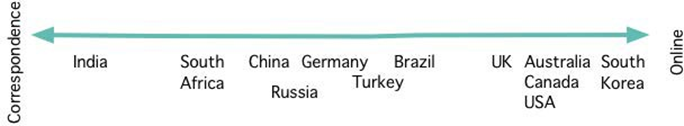

Online education has become the most common form of distance education in many western countries. It is easy to conflate online education with distance education. However, a spectrum of technologies and main delivery formats are used for distance education across the world. The figure below indicates the most common delivery formats by country and how common online education is and is not in the world.

In many emerging economies like India, correspondence education continues to be a critical part of distance education, and, to a lesser extent, broadcast television and radio remain important. This is partly due to limited access to information and communication technologies. But even in countries where there is access, there is a significant split between offering distance education via correspondence and via online delivery. In Russia, institutions providing online education separate themselves from correspondence education. Online education is referred to as distance education (distantsionnoe obrazovanie) and includes elements of e-learning, blended learning and flexible learning. This is done to distinguish modern distance education from correspondence education, which is associated with the Soviet system and has negative connotations. Educational institutions are investing in distance education while distancing themselves from correspondence education. In Turkey, too, distance education is often associated with lower-quality education.

Legacy infrastructure and investments of distance education providers

Some DE providers remain committed to correspondence education not because they oppose online education, lack access to technology, or are stubborn, but because they have long-standing infrastructure that supports correspondence education. The challenge is deciding which formats to use for course production and delivery when so much has already been invested in particular formats. For example, the University of South Africa, the largest DE provider in South Africa, has huge buildings for printing course materials. Any financial calculation about future programs needs to account for these sunk costs, which may make it more financially attractive to continue with correspondence education. But sunk costs are also an issue for online education. Many institutions use a course management system that still resembles WebCT or ANGEL, yet they are unable to move to a different platform because they have invested so heavily in their current one and the cost of migrating to a new system is prohibitive.

Government policy barriers

Online and distance education is subject to government regulation in most countries, including India, South Africa and Turkey. In Brazil, all distance education programs must be approved by the Ministry of Education. The documents submitted for approval are used to evaluate the curriculum, student selection policy, student admission numbers, ongoing student evaluation, attendance control, qualifications of the teaching staff, library and laboratory facilities, and partnerships with other groups. Programs must be re-approved every five years. In China, government regulations are administered by different levels of national and local educational authorities and include access, price, quality and information regulations. Such regulations can slow the provision of distance education or, as seen in India, prevent graduate programs from being offered via distance education at all.

OPPORTUNITIES AND INNOVATIONS

In the face of these challenges, online and distance education providers have not been standing still. They recognize the many opportunities to innovate in the context of all these changes and have undertaken many notable initiatives.

Credit transfer from MOOCs in Russia

In 2015, eight leading Russian universities created the Open Education Project. They include the two elite “autonomous universities”, Lomonosov Moscow State University and Saint Petersburg State University, both of which have a special legal status above the general education laws in Russia. Each of the eight institutions has invested 50 million rubles in the initiative (just over US$850,000 at the exchange rate at the time of writing). The Open Education Project offers about 200 courses and modules, nearly all of which are part of university programs and required in their respective disciplines. Students at Russian universities can transfer certificates from the Open Education Project into university credits. Taking the courses is free, but there is a charge for issuing certificates.

Competency-based education

The University of Wisconsin has historically been a leader and innovator in distance education in the United States, and it is currently a leading provider of online education too. Recently, the University of Wisconsin has introduced competency-based education in its programs. Students work at their own pace rather than in a cohort. They are assessed on the skills or competencies gained from their courses or on what they already know. When they pass their assessments, they receive credit and move on to their next courses. Courses and completion are not bound to the semester schedule or a paced syllabus, and students’ progress through their programs is not tied to “seat time”. This is an important way of recognizing and accrediting knowledge that students may already have or have gained at work or elsewhere. The University of Wisconsin uses academic success coaches who help students develop personalized learning plans and prepare for their assessments. Competency-based education can be used to complete certain certificates or degrees, and it can reduce both the time and the cost to completion for students.

Open textbooks

In Canada, the province of British Columbia launched an open textbook project through BCcampus, its organization that coordinates the development of online courses and open educational resources. The textbooks are created by faculty in the province for core college and university courses. By the end of 2015, 136 open textbooks had been adapted or created. The books are available in several e-book formats and, under Creative Commons licensing, can be downloaded by students for free. The textbooks save students money and also allow online courses to be fully online. British Columbia is now working with several other provinces to start and manage their own open textbook projects. Open textbooks are also being written by academics at Athabasca University in Alberta, an institution that aims for fully online courses requiring no physical mail delivery, not even of books.

Funding students with grants and scholarships to improve completion rates

Providers of online and distance education have rightly been concerned about the persistence and completion rates of distance education students. Most efforts to improve these rates have focused on the quality of distance education courses and offerings, through design, teaching, administration and student support. Penn State University’s online unit, World Campus, has undertaken an initiative to provide online students with small scholarships. Students, especially those from low-income backgrounds, can struggle with financial insecurity while taking courses. To help address this, some institutions have offered what are called “completion grants” or “retention grants” to help students who might otherwise drop out, or take longer to complete their programs, because they cannot pay tuition and fees. Retention grants are usually small amounts of money per student. Early research on retention grants in on-campus programs suggests they can be very helpful for students struggling financially. Penn State World Campus already has the highest completion rate of any online provider in the United States, at nearly 90%. Even so, it is trying to improve this completion rate and shorten time to graduation by offering small scholarships to online students who may be at risk of not completing their programs. The small scholarships and grants at Penn State serve as a kind of micro-finance for education.

On a larger scale, in Brazil, private institutions can obtain funds from the federal Ministry of Education to lend to students in financial need. These funds, ProUni (University for All Program) and FIES (Fund of Student Financing), help students continue their studies. They are meant to be loans but often function as grants: many of the students who seek such loans are studying to be school teachers, and the law provides that in such cases the loan does not need to be repaid.

Proactive, not reactive, quality assurance of distance education programs

Distance educators in many countries worry about the quality of distance education programs. They are often required by government policies or boards to review the quality of their programs, and they respond to these reviews and regulations by changing administration, design, teaching and support. In the United Kingdom, the Open University has always been proactive about addressing quality issues. Historically, the guidelines for good practice and quality audits did not apply to distance education. The Open University adapted the standard guidelines anyway and applied them to its distance education courses. It recognized that distance education providers often have to do twice the work to get half the acknowledgement. This proactive approach was well received by funding bodies and regulators. Now, the Quality Assurance Agency assures standards and quality for all higher education in the United Kingdom, including distance education. The continual emergence of new information and communication technologies suggests there is always room for distance education institutions to be proactive in providing and assuring quality programs and courses.

Online educators taking a business approach

In the face of increased competition from new for-profit providers, some existing educational institutions are taking a strongly business-oriented approach. Southern New Hampshire University in the United States takes an aggressively “student as consumer” and “customer is always right” approach in delivering its online programs. It even holds degree presentation ceremonies at students’ homes, where their families can be present.

The increasing amount and variety of competition in distance education can be a challenge for some institutions. Educators have historically had a strong collegial dynamic. The very word collegial has the same origin as college: collegium, meaning a group in which members are relatively equal in power. While there has always been a competitive element to education for students, the new entrants in online and distance education challenge open universities and other distance education providers to develop new, competitive strategic plans.

Private companies buying public higher education institutions

Kroton Educacional is a private business that was created over 40 years ago with a focus on primary and secondary education in Brazil. It has adapted its business over the decades as demand for education has grown and trends have shifted, and it saw the growth and potential of distance education in Brazil. Kroton also knows that reputation is important in education and that students want their degrees to come from institutions that will still exist throughout their working careers. So Kroton did not build a new university or institution to provide distance education. Instead, it bought existing institutions that already had reputations and shifted their focus toward distance education. In 2011, Kroton Educacional bought Unopar, and in 2014 it bought Anhanguera. These are the two largest providers of distance education in Brazil. Private providers like Kroton are trying to provide distance education spaces and programs that public educational institutions, for various reasons, have not been able to offer.

Public distance education institutions partnering with private businesses

For decades, academic institutions have partnered with other universities, within and across borders, to offer joint degrees and programs via distance education. Increasingly, these partnerships also include businesses. In South Africa, the Open Learning Group is an accredited private higher education institution, but it also partners with two public higher education institutions. For example, the Open Learning Group collaborates with the unit for Open Distance Learning at the University of the North-West. It provides “logistical and administrative support to students” in some programs, as well as online elements consisting of interactive whiteboard sessions, tutoring, discussion forums and chats with students and lecturers, electronic access to digitized materials, and online assessment through Moodle. Another example is in China, where Peking University has partnered with Alibaba Group, an e-commerce company that is also the largest retailer in the world, to co-create a platform for Chinese MOOCs.